One of the hardest things to do when you’re spinning up a growth content process is figuring out what to tackle and in what order.

The most important foundations for any business are its positioning, its audience, and its message, but there are dozens or hundreds of ways to test and improve all of those things. Which comes first?

The common answer is to go with either your own gut or the HiPPO’s (highest paid person’s opinion). Especially in the early days of a new department or company, the temptation is to fire away as quickly as you can just so you’re doing something.

That’s a mistake.

First, you don’t build a good habit for when your team grows. Second, if you don’t think rationally about tests and experiments, you aren’t using data-driven decision making at the very start of your growth process, which casts everything that follows in a suspect light.

ICE decision making matrix

If you’ve been doing growth marketing for a while, you probably know ICE, an acronym for a way to score experiments and A/B tests. The letters stand for “Impact, Confidence, and Ease.” In short:

- Impact is how much of a change you think this experiment could bring

- Confidence is how likely you think it is to have a positive result, and

- Ease scores how much work you think it would take to implement (the less work, the higher the score).

The ICE matrix scores each letter on a 1 – 10 scale and adds the three scores together, for a total from 3 to 30. Tests with especially low scores probably never get tried, and higher-scoring tests get prioritized.
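As a minimal sketch, assuming each experiment is stored with its three scores, ICE scoring and ranking might look like this in Python. The class and function names and the sample experiments are hypothetical. Because each component is at least 1, the lowest possible total is 3.

```python
from dataclasses import dataclass


@dataclass
class IceExperiment:
    name: str
    impact: int      # 1-10: how much change this experiment could bring
    confidence: int  # 1-10: how likely a positive result is
    ease: int        # 1-10: how little work it takes (10 = least work)

    def score(self) -> int:
        for value in (self.impact, self.confidence, self.ease):
            if not 1 <= value <= 10:
                raise ValueError("ICE components must be between 1 and 10")
        # ICE adds the three components, so totals range from 3 to 30.
        return self.impact + self.confidence + self.ease


def prioritize(backlog: list[IceExperiment]) -> list[IceExperiment]:
    """Return experiments sorted from highest to lowest ICE score."""
    return sorted(backlog, key=lambda e: e.score(), reverse=True)


# Hypothetical backlog entries.
backlog = [
    IceExperiment("New pricing page headline", impact=7, confidence=5, ease=9),
    IceExperiment("Rebuild onboarding flow", impact=9, confidence=4, ease=2),
    IceExperiment("Exit-intent popup", impact=3, confidence=6, ease=8),
]

for exp in prioritize(backlog):
    print(f"{exp.score():>2}  {exp.name}")
```

The headline test (21) ranks first, the popup (17) second, and the onboarding rebuild (15) last, despite having the highest Impact.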

I like the ICE matrix and have used it for a while, but it has its shortcomings:

- It implies priority, but it makes no room for timing effects such as sales seasonality or the urgency of learning something before a new release.

- I’ve been doing growth marketing for more than 15 years, and I am still wrong more often than I am right. That means my confidence that something will have a positive effect is, honestly, a guess with little real-world connection to results.

- The Ease score tends to be vague.

After a few years using ICE to score my backlog, I developed a slightly different model I call PIER.

PIER decision making matrix

I’ve written about it before, but PIER is an acronym for “Priority, Importance, Ease, and Result.” What do these mean?

- Priority lets you decide the urgency of a task as you score it. (1 – 6 points)

- Importance measures how well this experiment aligns with the objectives of the marketing team, the growth team, or the overall business. (1 – 5 points)

- Ease describes how much effort an experiment is estimated to require (1 – 5 points), and

- Result is the likelihood that this experiment will produce a measurable result—good or bad. (1 – 5 points)

PIER scores each element on a 1 – 5 scale, except Priority, which uses a 6-point scale so that urgency weighs a little more heavily than the other elements. Fill in a score for every element, then multiply them together to get the final score. Because 6 × 5 × 5 × 5 = 750, you will end up with a score from 1 to 750.
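The scoring rule can be sketched as a small Python function. The name `pier_score` and the input validation are illustrative assumptions; the scales (Priority 1 to 6, every other element 1 to 5) and the multiplication come from the description above.

```python
import math

# Upper bound of each PIER element's scale; every element starts at 1.
PIER_SCALES = {"priority": 6, "importance": 5, "ease": 5, "result": 5}


def pier_score(priority: int, importance: int, ease: int, result: int) -> int:
    """Hypothetical helper: multiply the four PIER elements into one score (1-750)."""
    elements = {
        "priority": priority,
        "importance": importance,
        "ease": ease,
        "result": result,
    }
    for name, value in elements.items():
        if not 1 <= value <= PIER_SCALES[name]:
            raise ValueError(f"{name} must be between 1 and {PIER_SCALES[name]}, got {value}")
    # Multiplying (rather than adding) means one weak element pulls the whole score down.
    return math.prod(elements.values())


print(pier_score(6, 5, 5, 5))  # 750, the maximum
print(pier_score(1, 1, 1, 1))  # 1, the minimum
print(pier_score(4, 3, 5, 2))  # 120
```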

When you score an experiment with PIER, have more than one person submit scores. For each element, add everyone’s scores together and divide by the number of people who scored the experiment, so each element uses the team’s average.
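A minimal sketch of the team averaging, assuming each person’s scores are collected as a dictionary keyed by element name. The function name and the sample scorecards are hypothetical. Each element is averaged across scorers first, and the averages are then multiplied.

```python
import math
from statistics import mean

ELEMENTS = ("priority", "importance", "ease", "result")


def team_pier_score(scorecards: list[dict[str, float]]) -> float:
    """Hypothetical helper: average each PIER element across scorers, then multiply."""
    if not scorecards:
        raise ValueError("at least one scorecard is required")
    averages = [mean(card[element] for card in scorecards) for element in ELEMENTS]
    return math.prod(averages)


# Two people score the same experiment (hypothetical numbers).
scorecards = [
    {"priority": 5, "importance": 4, "ease": 3, "result": 4},
    {"priority": 3, "importance": 4, "ease": 5, "result": 2},
]
print(team_pier_score(scorecards))  # averages 4, 4, 4, 3 -> 192
```

Averaging each element first is not the same as averaging each person’s final product: for the two scorecards above, the individual products are 240 and 120, which average to 180 rather than 192. The sketch follows the per-element averaging.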

How to better calculate Ease in PIER scoring

“Ease” is key in ICE scoring, but it’s a little squishy. How do you estimate it? Everyone scores it a bit differently, so I came up with a standardized system that works well for growth and marketing teams.

For every team outside your own that needs to touch a task, I subtract 2 points. I also deduct a point for every 10 hours of work I expect it to take. If an experiment requires no dev work and less than 10 hours of work, it scores a 5 (really easy!). If it requires a developer, a sales engineer, and someone from the Finance team, and will take more than 40 hours of work overall, well, that’s probably not a good experiment to undertake. Descope and try again.
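Here is a minimal sketch of that rule, assuming the score starts at 5 and that each full 10 hours of estimated work costs one point. The function name is hypothetical, and returning `None` when the score falls below 1 (instead of clamping it) is an assumption meant to flag the “descope and try again” case.

```python
from typing import Optional


def ease_score(outside_teams: int, estimated_hours: float) -> Optional[int]:
    """Hypothetical helper: standardized PIER Ease score.

    Starts at 5, subtracts 2 for every team outside your own that must touch
    the task, and 1 for every full 10 hours of estimated work. Returns None when
    the result drops below 1, signaling "descope and try again".
    """
    if outside_teams < 0 or estimated_hours < 0:
        raise ValueError("inputs must be non-negative")
    score = 5 - 2 * outside_teams - int(estimated_hours // 10)
    return score if score >= 1 else None


print(ease_score(0, 8))   # 5: no outside teams, under 10 hours
print(ease_score(1, 25))  # 1: one outside team (-2), two full 10-hour blocks (-2)
print(ease_score(3, 45))  # None: dev, sales engineer, and Finance plus 40+ hours
```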

Calculating the Result Score

It’s almost impossible to be consistently right about whether a test will have a positive impact. On the other hand, it’s fairly easy to know whether you have instrumentation in place that can detect a result, and you can get a good estimate of whether you’ll have enough traffic to get a clear signal about which side of the test performed better.
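One way to estimate whether you’ll have enough traffic is a standard sample-size calculation for comparing two conversion rates. The sketch below uses the normal approximation for a two-sided test of two proportions. The article doesn’t prescribe a formula, so this choice, the conventional defaults (5% significance, 80% power), the even 50/50 traffic split, and the helper names are all assumptions.

```python
from math import ceil
from statistics import NormalDist


def visitors_needed_per_variant(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate visitors per variant for a two-sided test of two proportions."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("conversion rates must be between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # value needed for the desired power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = baseline_rate - expected_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def has_enough_traffic(
    weekly_visitors: int, weeks: int, baseline_rate: float, expected_rate: float
) -> bool:
    """Hypothetical check: does the test window give both variants enough visitors?"""
    needed = visitors_needed_per_variant(baseline_rate, expected_rate)
    # Assumes traffic is split evenly between two variants.
    return weekly_visitors * weeks >= 2 * needed


# Hypothetical example: 3% baseline conversion, hoping to detect a lift to 3.6%.
print(visitors_needed_per_variant(0.03, 0.036))
print(has_enough_traffic(5_000, 4, 0.03, 0.036))   # False
print(has_enough_traffic(20_000, 4, 0.03, 0.036))  # True
```

If the check comes back false, the test is unlikely to produce a clear signal in the time available, which is the situation a low Result score is meant to capture.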

There are times you need to run a test even when you don’t have all the analytics or reporting you’d like in order to be sure of its outcome; that’s what Priority and Importance are for. In general, though, avoid tests where you might not be able to tell exactly what the outcome was.

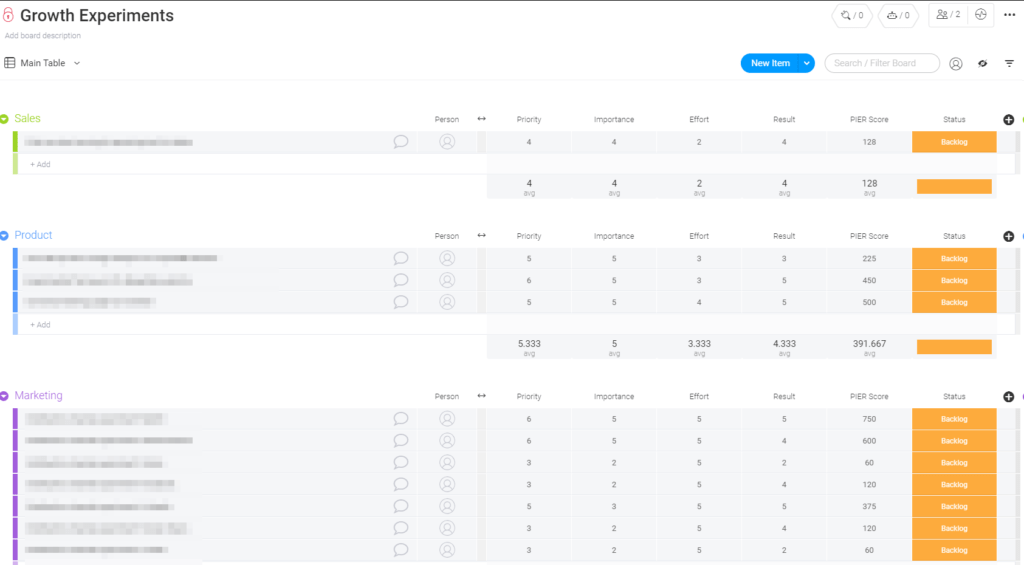

When you’re ready to use either ICE or PIER, it’s good to open up your task tracking tool and drop all of the experiments that you’re considering directly in there:

What do you think?

With that in mind, which system do you like better: ICE for its simplicity, or PIER for its greater rigor and focus on using data to drive decisions? Hit me up at @trevorlongino on Twitter or drop a note below and let me know. 🙂